Smurfs and Data Mining

Anatella can be fast, very fast, or incredibly fast. It’s up to you to use it right.

What do Smurfs and Anatella have in common? They are both from Belgium. And they both show that when it comes to winning, size is not what matters most: working together is. And we show here that doing things right can have a huge impact: from 106 seconds to 13 seconds!

Anatella is built for speed, and all those who have used it have experienced it. And it is so easy to use that we often think that we don’t need to think about optimizing our scripts. And in many cases, it is true (because honestly, whether we run 5 million records in 12 seconds instead of 40 does not matter that much). But sometimes having a fast process is not enough, and the volume of data we are dealing with is so large that even the super processing power of Anatella is not enough. We want to think in a collaborative way. After all, no Smurf ever escaped Gargamel by working alone. And for very fast processing, Anatella offers the little blue man!

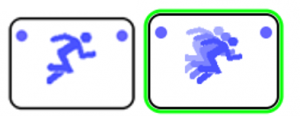

This “Little Blue Man” (a.k.a. MultiThreadRun, its boring technical name) lets Anatella distribute the computation across several cores. While the “single” running man lets each core take care of one particular task, setting the “thread” parameter to a value >1 makes multiple cores work together on a single problem.

Why does it matter? Let’s take a small example.

Let’s say we’d like to make two aggregates, one per city (100), one per region (10). Typically, this will involve quite a lot of resources, and with 10,000,000 records, on a humble i5 with 8 GB of RAM, it can take quite some time.

So, as any normal Anatella user would do, I will of course attempt this on my laptop without even thinking about running this query in SQL.

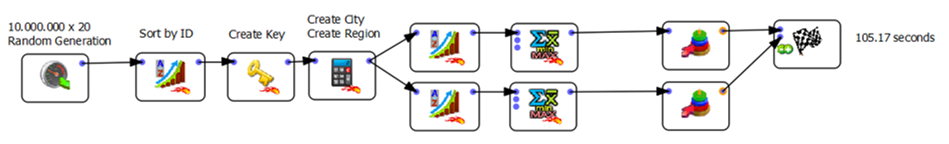

Let’s first do it very “naively” (and quite inefficiently) by sorting, aggregating and finally writing the files:

Here we use two aggregation boxes, one for “city”, one for “region”, and we run them sequentially. It takes about 106 seconds, not too bad for processing 10,000,000 records on a laptop! But in this case, we need to wait until the first file is saved before we can start the second aggregation. Quite a bad use of resources.

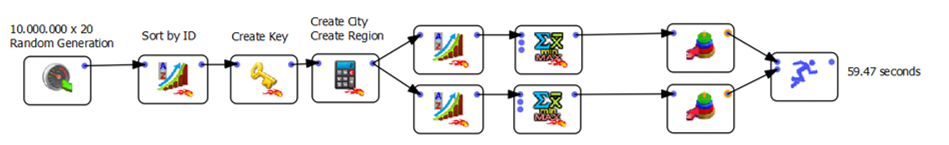

Let’s try this one. The only change is to use a Little Blue Man instead of the flag (parallel instead of sequential):

My little blue men are now running together, and I actually processed all the data in only 59 seconds. Not Bad! Who is the man? Papa Smurf is the man!
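For readers who want to see the sequential-versus-parallel idea outside Anatella, here is a minimal Python sketch. It is not Anatella’s API: the sample records and the `aggregate_by` helper are hypothetical, and a thread pool merely stands in for the Little Blue Man. In CPython, threads mostly help with I/O-bound work, so this shows the shape of the two graphs and not the timings quoted above.

```python
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor

# Hypothetical sample records: (city, region, amount).
records = [
    ("Brussels", "Brussels-Capital", 10.0),
    ("Ghent", "Flanders", 5.0),
    ("Antwerp", "Flanders", 7.5),
    ("Brussels", "Brussels-Capital", 2.5),
]

def aggregate_by(rows, key_index):
    """Sum the amount (last column) per distinct value of the key column."""
    totals = defaultdict(float)
    for row in rows:
        totals[row[key_index]] += row[-1]
    return dict(totals)

# Sequential: the second aggregate only starts once the first has finished.
by_city_seq = aggregate_by(records, 0)
by_region_seq = aggregate_by(records, 1)

# Parallel: both independent aggregates are submitted at the same time.
with ThreadPoolExecutor(max_workers=2) as pool:
    city_future = pool.submit(aggregate_by, records, 0)
    region_future = pool.submit(aggregate_by, records, 1)
    by_city_par = city_future.result()
    by_region_par = region_future.result()

print(by_city_par)
print(by_region_par)
```

The two aggregates share no state, which is what makes it safe to run them side by side; the results are the same either way, only the waiting changes.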

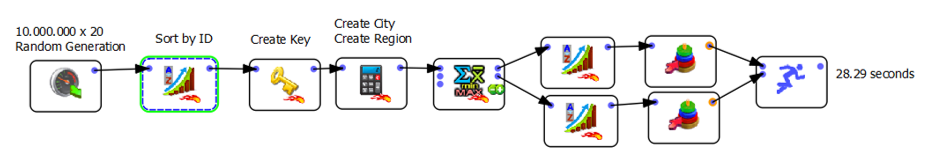

But while I’m at it, I can see it is still inefficient. I’m sorting the entire database three times, which forces hard-disk access where I could work in RAM (because an aggregation on a few thousand categories is still a relatively small task… one that 8 GB of RAM will handle flawlessly). So… let’s switch our aggregate action to the “In-Ram” option and see what happens.
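Why can skipping the sort help so much? A rough Python sketch, assuming the “In-Ram” option behaves like a hash-based aggregation (the source does not say how Anatella implements it). The function names and sample data are hypothetical. The sorted variant has to order every record before it can sum anything, while the in-RAM variant makes a single pass and keeps only one running total per category.

```python
from collections import defaultdict
from itertools import groupby
from operator import itemgetter

# Hypothetical sample records: (city, amount).
records = [("Ghent", 5.0), ("Brussels", 10.0), ("Ghent", 1.0), ("Antwerp", 7.5)]

def aggregate_sorted(rows):
    """Sort-then-aggregate: every record is sorted before any sum is computed."""
    ordered = sorted(rows, key=itemgetter(0))
    return {
        city: sum(amount for _, amount in group)
        for city, group in groupby(ordered, key=itemgetter(0))
    }

def aggregate_in_ram(rows):
    """Single pass: memory holds one running total per category, not per record."""
    totals = defaultdict(float)
    for city, amount in rows:
        totals[city] += amount
    return dict(totals)

# Only the small aggregated result needs sorting afterwards.
result = sorted(aggregate_in_ram(records).items())
print(result)
```

With 100 cities, the dictionary holds 100 entries no matter how many millions of records flow through it, which is why sorting the output afterwards costs next to nothing.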

BAM! From 106 seconds to 28 seconds. Note that if we could get rid of the first “Sort by ID”, the whole operation would take less than 13 seconds… and with such a small amount of data to sort after the aggregate, the final operation only takes 0.03 seconds… in parallel or not! So, even before you think about calling Papa Smurf for help, try to Randomly Access your Memory, and write a more efficient graph!
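To put the timings quoted in this post side by side, a few lines of Python turn them into speedup factors against the 106-second baseline. The labels are ours, and the 13-second figure is the “less than 13 seconds” estimate, so its factor is a lower bound.

```python
baseline = 106.0  # seconds: sequential sort, aggregate, write

# Timings quoted in the text, in seconds.
timings = {
    "parallel (Little Blue Man)": 59.0,
    "In-Ram aggregate": 28.0,
    "In-Ram, no initial sort (at most)": 13.0,
}

speedups = {name: round(baseline / seconds, 2) for name, seconds in timings.items()}

for name, factor in speedups.items():
    print(f"{name}: {factor}x faster than the naive graph")
```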