MODELER

AUTOMATED MODELING

ACCURATE & FAST

Get the best conversion and retention rates and the best risk estimates in seconds.

POWERFUL

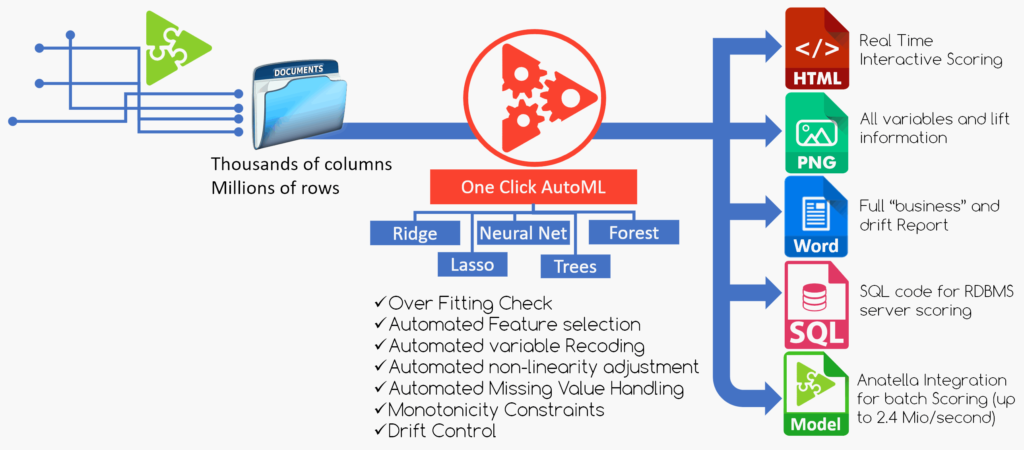

No limit on the size of the learning dataset: Modeler handles tens of thousands of variables and millions of rows.

AUTOMATED

Benefit from over 20 years of Data Science expertise integrated directly into Modeler, with just a few clicks.

ACTIONABLE

With Modeler, your models are sure to leave the “data lab” and go into production with a single drag and drop.

MODELER IS THE PLEASURE OF:

- immediately gaining a better understanding of your target and your business,

- challenging your preconceptions or beliefs,

- appreciating the unexpected,

- discovering hidden patterns in your data,

- validating your choices and the relevance of your feature engineering,

- testing the most original scenarios.

WHY MODELER

Unmatched Accuracy and Speed

The more relevant your selection of variables and the better tuned your meta-parameters, the better your results will be. Thanks to its 100% multi-threaded, vectorized and cache-optimized engine, Modeler tests more than 15,000 different models in a few seconds and then delivers the best one.

Scalable

Modeler also easily manages very large data sets with more than 30,000 variables and several million rows. Its proprietary data compression engine typically allows it to store a 1 GB dataset in less than 50 MB of RAM.

Stable

The algorithms used in Modeler are mostly insensitive to dirty data (such as outliers) and to missing data. You can use Modeler directly on databases containing your operational data to quickly obtain usable models.

Thousands of models

Modeler automatically creates new variables to increase accuracy. Modeler creates thousands of predictive models and ultimately delivers the best and most stable model. Modeler adjusts all model meta-parameters, calculates confidence intervals and generates all necessary reports.

The 3 types of target

Modeler has 2 predictive engines: one engine for binary targets and the other for continuous targets. When you combine Modeler with the single assignment solver box (in Anatella), Modeler also becomes one of the best solutions for predicting multi-class targets.

Low risk of overfitting

Modeler integrates the 4 best technologies to fight overfitting, taking into account the structure of the delivered model. In 99% of cases, Modeler automatically provides a model without overfitting. If you still see overfitting, you can simply adjust the modeling meta-parameters to remove it.
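A common way to spot overfitting yourself is to compare a model's quality on the data it was trained on with its quality on held-out data. The sketch below illustrates that check with the AUC metric. The metric, the function names and the toy scores are assumptions made for illustration; they do not describe Modeler's internal safeguards.

```python
def auc(labels, scores):
    """Probability that a random positive is scored above a random negative.

    Ties count for half a win. `labels` holds 0/1 values.
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def overfitting_gap(train_labels, train_scores, valid_labels, valid_scores):
    """Training AUC minus validation AUC; a large positive gap hints at overfitting."""
    return auc(train_labels, train_scores) - auc(valid_labels, valid_scores)


if __name__ == "__main__":
    # Hypothetical scores: perfect ranking on training data, weaker on held-out data.
    gap = overfitting_gap(
        [1, 0, 1, 0], [0.9, 0.1, 0.8, 0.3],
        [1, 0, 1, 0], [0.9, 0.8, 0.4, 0.3],
    )
    print(f"AUC gap between training and validation: {gap:.2f}")
```

A gap close to zero suggests the model generalizes; what counts as "too large" depends on the dataset and the business context.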

White box and ethics

Modeler systematically selects the smallest possible set of the most relevant variables. It uses an enhanced “wrapper approach” that tells you exactly which variables are selected. This approach yields models that are very precise yet very simple (with few variables), and therefore easy to validate from an ethical point of view thanks to the numerous automatically generated modeling reports.
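For readers unfamiliar with the term, a wrapper approach scores candidate sets of variables by actually building and evaluating a model on each set. The sketch below shows the textbook greedy forward variant, not Modeler's enhanced version: the nearest-centroid classifier, the function names and the toy data are all assumptions chosen to keep the example self-contained.

```python
def centroid_accuracy(train, valid, subset):
    """Hold-out accuracy of a nearest-centroid classifier using only `subset`."""
    groups = {}
    for x, y in train:
        groups.setdefault(y, []).append([x[f] for f in subset])
    centroids = {
        y: [sum(col) / len(rows) for col in zip(*rows)]
        for y, rows in groups.items()
    }

    def predict(x):
        point = [x[f] for f in subset]
        return min(
            centroids,
            key=lambda y: sum((a - b) ** 2 for a, b in zip(point, centroids[y])),
        )

    return sum(predict(x) == y for x, y in valid) / len(valid)


def forward_select(n_features, score, min_gain=1e-9):
    """Greedy forward wrapper: keep adding the variable that most improves `score`."""
    selected = []
    best = score(selected)
    while len(selected) < n_features:
        candidates = [f for f in range(n_features) if f not in selected]
        top_score, top_f = max((score(selected + [f]), f) for f in candidates)
        if top_score - best <= min_gain:
            break  # no remaining variable improves the model: stop early
        selected.append(top_f)
        best = top_score
    return selected, best


# Toy data (hypothetical): only variable 0 separates the two classes.
TRAIN = [([0.0, 5.0, 1.0], 0), ([1.0, 3.0, 0.0], 0),
         ([4.0, 4.0, 1.0], 1), ([5.0, 4.0, 0.0], 1)]
VALID = [([0.5, 4.5, 0.0], 0), ([1.5, 3.5, 1.0], 0),
         ([4.5, 3.0, 1.0], 1), ([3.5, 5.0, 0.0], 1)]

if __name__ == "__main__":
    chosen, accuracy = forward_select(
        3, lambda subset: centroid_accuracy(TRAIN, VALID, subset)
    )
    print("selected variables:", chosen, "hold-out accuracy:", accuracy)
```

Because the search stops as soon as no extra variable improves the hold-out score, the result is an explicit, short list of variables, which is what makes this family of methods easy to audit.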

Transparent

All algorithms and parameters are fully documented and available.

Modeler fully automates the process of optimizing these meta-parameters. However, if you wish, you can switch to manual mode and optimize them at your discretion. This allows you to maintain full control over the entire algorithmic chain used in Modeler.

Easy deployment

Modeler offers dozens of options to help you get your models into production. You can simply drag and drop your “model file” into an Anatella window or export your models to simple SQL code (for in-database scoring) with a mouse click.
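To give an idea of what in-database scoring with plain SQL can look like, here is a minimal sketch that turns the coefficients of a logistic model into a `SELECT` statement. Everything in it is an assumption made for illustration: the model form, the `logistic_to_sql` helper, and the table and column names. The SQL that Modeler actually exports may look quite different.

```python
def logistic_to_sql(intercept, coefs, table, id_col="id"):
    """Build a SQL query that scores every row of `table` with a logistic model.

    `coefs` maps column names to weights. Identifiers are inserted as-is,
    so they must come from a trusted source.
    """
    terms = [f"({weight!r} * {column})" for column, weight in coefs.items()]
    linear = " + ".join([repr(intercept)] + terms)
    return (
        f"SELECT {id_col}, 1.0 / (1.0 + EXP(-({linear}))) AS score\n"
        f"FROM {table};"
    )


if __name__ == "__main__":
    # Hypothetical churn model with two input columns.
    print(logistic_to_sql(
        -1.5,
        {"tenure_months": -0.02, "support_calls": 0.8},
        table="customers",
        id_col="customer_id",
    ))
```

Once a model is expressed this way, the database computes the scores itself, so no data needs to leave it.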

BOOST YOUR PRODUCTIVITY

Simplify and accelerate the process of creating predictive models. Where a team typically builds a dozen models a year, Modeler enables the same analysts to become true business experts in their companies by building hundreds, or even millions, of models each year.

Testimonials

“The optimal solution to extract advanced Social Network Algorithms metrics out of gigantic social data graphs.”

“We reduced by 10% the churn on the customer-segment with the highest churn rate.”

“TIMi framework includes a very flexible ETL tool that swiftly handles terabyte-size datasets on an ordinary desktop computer.”