Icon: ![]()

Function: upsertODBC

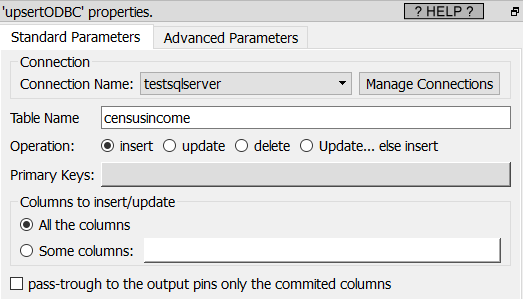

Property window:

Short description:

Insert/update/Delete some rows inside a relational database using the ODBC driver.

Long Description:

This action Insert, Update or Delete some rows inside a relational database using the ODBC driver.

Before executing the ![]() upsertODBC Action, you must first define an ODBC connection: See the section 5.1.6. to know how to create an ODBC connection from Anatella to your database.

upsertODBC Action, you must first define an ODBC connection: See the section 5.1.6. to know how to create an ODBC connection from Anatella to your database.

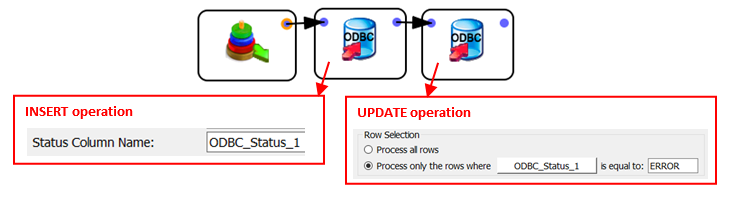

Here is how to do a classical “Upsert” (Insert otherwise Update) operation in an efficient way:

This Anatella-Graph first attempts to insert some new rows into a table inside a relational database. Some of these rows already exist inside our relational database. These rows will trigger an error at each attempt of insertion. These “insertion” errors are signaled inside the output column “ODBC_Status_1”: i.e. the column “ODBC_Status_1” contains “ERROR” for all failed insertion. Thereafter, there is a second “ODBC writer” that takes as input all the rows with an insertion error and performs an “update” on them.

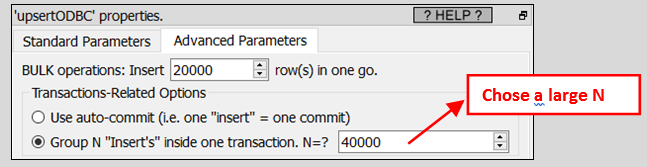

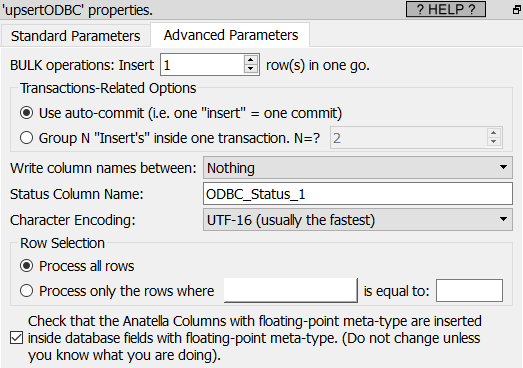

The “Insert”, “Update” and “Delete” operations are sent “in batch” to the database driver. The size of these batches (the number of “Insert”, “Update” and “Delete” operations in a batch) is the parameter is named “BULK operations”. Large-batch-size usually means higher performances but, unfortunately, not all the databases are working properly with large-batch-size. The safest option when working with low-grade database drivers is to set the “BULK operations” parameter to 1.

On most databases, using the “auto-commit” option is the fastest option because it means that the database does not have to manage all the ACID properties related to the processing of TRANSACTIONS. This is not always true: When the “target” table (i.e. the table to modify) has an index structure, it might be faster to use TRANSACTIONS: See the next section about this subject.

![]()

Oddly enough, the most efficient way to INSERT rows inside a “DB2” database is to use large TRANSACTIONS.

Indeed, DB2 is forcing a SYNCHRONIZE operation (i.e. an operation where the content of the database in RAM is “flushed out” to the hard drive, to prevent any data loss in case of electrical power cut) at the end of each and every TRANSACTION. It means that, if you are using “auto-commit”, DB2 peforms a SYNCHRONIZE operation for every row that is INSERT’ed inside the database: This dramatically slows down everything.

Since all SYNCHRONIZE operations are extremely slow, it means that, to get the highest speed when using DB2, the best option is to reduce to the minimum the number of TRANSACTIONS. Thus, the fastest option for DB2 is usually: