The software design of Anatella has been carefully thought out to obtain one of the fastest ETL tools (maybe the fastest) on “simple commodity hardware”.

There are currently three main approaches to designing a new ETL tool based on “small graphical boxes”. The main difference between these three approaches lies in the technique used to “transfer” the data stream from one “little box” to the next one. The three common approaches are:

1. Description: The different boxes communicate through a file on the hard drive. Each operator/box reads a file as input and writes a file as output.

Advantages: Each operator can be a completely separate executable. If you assign one developer per “executable/operator” while developing the ETL tool, it is very easy to find which developer to blame when an operator crashes, so managing the development team is very easy. This approach allows fast and cheap development of the ETL tool and is, of course, very common. Only low-grade ETL tools use this approach.

Disadvantages: This technique is very slow because the speed of any ETL software is usually inversely proportional to the number of disk I/O operations performed (at least for analytical tasks). Since the whole data table must be written and then read again between each pair of boxes, this generates an enormous amount of I/O, leading to an extremely slow ETL.

Example of ETL tools using this approach: SAS Enterprise Guide (not SAS Base), Amadea.
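The file-based approach described above can be sketched as follows. This is a minimal illustration only, not the code of any real tool: the helper names (`run_box`, `run_file_pipeline`, `keep_adults`, `upper_name`) and the CSV layout are assumptions made for the example. It shows that every box rewrites the whole table to disk.

```python
import csv
import os
import tempfile


def run_box(transform, in_path, out_path):
    """One hypothetical 'box': read the whole input file, write the whole output file."""
    with open(in_path, newline="") as src, open(out_path, "w", newline="") as dst:
        writer = csv.writer(dst)
        for row in csv.reader(src):
            out = transform(row)
            if out is not None:  # None means "drop this row"
                writer.writerow(out)


def run_file_pipeline(rows, transforms, workdir):
    """Chain boxes through intermediate files; return the final rows and the file count."""
    path = os.path.join(workdir, "step0.csv")
    with open(path, "w", newline="") as f:
        csv.writer(f).writerows(rows)
    files_written = 1
    for i, transform in enumerate(transforms, start=1):
        next_path = os.path.join(workdir, f"step{i}.csv")
        run_box(transform, path, next_path)  # full read + full write per box
        path = next_path
        files_written += 1
    with open(path, newline="") as f:
        return list(csv.reader(f)), files_written


def keep_adults(row):
    return row if int(row[1]) >= 18 else None


def upper_name(row):
    return [row[0].upper(), row[1]]


def demo_file_pipeline():
    rows = [["ann", "34"], ["bob", "12"], ["cid", "51"]]
    with tempfile.TemporaryDirectory() as workdir:
        return run_file_pipeline(rows, [keep_adults, upper_name], workdir)
```

With two boxes, the table is written to disk three times (once as input, once per box) and read back twice, which is where the I/O cost comes from.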

2. Description: Each box has its own thread. Each arrow between two boxes is a “queued buffer”. Each “thread/box” reads new “rows” from its input queue and writes “processed rows” to its output queue (see section 5.3.2.9. for more information about this “multithreaded” approach). This approach can be described as a “push” approach: each “box” processes a row and thereafter pushes it into the queue for the next box on the right. The classical consumer/producer problem occurs in each input/output queue. The queues must thus have a mechanism (usually based on semaphores and mutex locks) to ensure consistency between threads.

Advantages: All operators run in parallel, exploiting the multiple CPUs available on your computer. By default, for simple “row operations”, there is no disk I/O to perform and thus this approach is far more efficient than the previous one.

Disadvantages:

o There are as many threads running in parallel as there are “boxes” in your transformation script. The number of active threads inside the ETL tool can thus be quite large. In such a situation, the main CPU of your PC will spend a large amount of time in “context switching”: it will lose time switching from one thread to another. See section 5.3.2.9. for more information about this subject.

o There is a large number of “queued buffers” (one for each arrow in the transformation graph). For efficiency reasons, each of these buffers must contain several hundred rows. If we are manipulating “big” rows (that contain thousands of columns), each of these buffers can require as much as 100 MB. This imposes a limit of about 20 arrows in the graph on “common PC hardware” (for example a 32-bit Windows XP machine, where 20 × 100 MB already fills the 2 GB of address space available to a process by default). This approach can thus lead to serious memory limitations.

o This approach requires a deep copy of each row into the output “queued buffer” that exists between each pair of “boxes”. This means that for a simple script like this one:

... all the rows are “deep-copied” 5 times in the 5 “queued buffers”. If we are manipulating “big” rows, this “deep-copy” requires a huge amount of CPU power and significantly slows down the computations.

Example of ETL tools using this approach: CloverETL, Kettle.
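The “push” approach described above can be sketched as follows. This is only an illustration under stated assumptions: the names (`box_worker`, `run_push_pipeline`, `buffer_rows`) are hypothetical, and Python's `queue.Queue` stands in for the “queued buffer” with its internal locking. The sketch shows the three costs listed above: one thread per box, one bounded buffer per arrow, and one deep copy per row per buffer.

```python
import copy
import queue
import threading

_DONE = object()  # sentinel marking the end of the row stream


def box_worker(transform, inbox, outbox):
    """One thread per box: take a row, process it, push a deep copy onward."""
    while True:
        row = inbox.get()
        if row is _DONE:
            outbox.put(_DONE)
            return
        out = transform(row)
        if out is not None:  # None means "drop this row"
            outbox.put(copy.deepcopy(out))  # the per-arrow deep copy


def run_push_pipeline(rows, transforms, buffer_rows=256):
    """Run each transform in its own thread, linked by bounded queues."""
    queues = [queue.Queue(maxsize=buffer_rows) for _ in range(len(transforms) + 1)]

    def feed():
        for row in rows:
            queues[0].put(row)
        queues[0].put(_DONE)

    threads = [threading.Thread(target=feed)]
    threads += [
        threading.Thread(target=box_worker, args=(t, queues[i], queues[i + 1]))
        for i, t in enumerate(transforms)
    ]
    for th in threads:
        th.start()
    results = []
    while True:  # the calling thread drains the last queue
        row = queues[-1].get()
        if row is _DONE:
            break
        results.append(row)
    for th in threads:
        th.join()
    return results


def keep_adults(row):
    return row if row["age"] >= 18 else None


def upper_name(row):
    row["name"] = row["name"].upper()
    return row
```

Because every row is copied on its way into each queue, the rows that come out of the pipeline are different objects from the rows that went in, and the copying cost grows with the width of the rows.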

3. Description: There is only one thread that runs the whole transformation graph. When a “box” requires a new row to perform its computation, it asks the previous “box” for a row. This approach can be described as a “pull” approach: each “box” pulls a row from the “previous box on the left” and thereafter processes it.

Advantages: By default, for simple “row operations”, there is no disk I/O to perform and thus this approach is very efficient. Since a single thread processes the whole transformation graph, there is no need for “queued buffers” between the boxes. This approach is thus a lot less “memory hungry” than the previous one. Since there are no “queued buffers” at all, we can also avoid deep-copying all the rows. Without deep copies, the stress on the CPU is greatly reduced and the CPU can be fully used to run the “useful” computations, i.e. the transformations taking place inside the different “boxes”.

Disadvantages: This approach does not directly use all the CPUs available on your computer. Thus, depending on the type of transformation, it can be slower than the previous approach. In particular, if you have several database extractions to perform, this approach is not efficient at all because it runs the extractions sequentially (whereas the previous approach runs them simultaneously). This approach is nevertheless far more efficient than the first one.

Example of ETL tool using this approach: Old ETL tools.
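The “pull” approach described above maps naturally onto Python generators. The sketch below is an illustration only, with hypothetical box names (`read_rows`, `keep_adults`, `upper_name`): each box asks the box on its left for the next row, everything runs in a single thread, and rows are passed by reference without queues or copies.

```python
def read_rows(rows):
    """Source box: hands out rows one at a time when asked."""
    for row in rows:
        yield row


def keep_adults(upstream):
    """Filter box: pulls from the box on its left, forwards matching rows."""
    for row in upstream:
        if row["age"] >= 18:
            yield row


def upper_name(upstream):
    """Transform box: modifies the row in place, so no copy is made."""
    for row in upstream:
        row["name"] = row["name"].upper()
        yield row


def run_pull_pipeline(rows):
    """Build the chain right to left; the last box drives the whole graph."""
    pipeline = upper_name(keep_adults(read_rows(rows)))
    return list(pipeline)
```

Here the rows returned by `run_pull_pipeline` are the very same objects that were fed in, which is the counterpart of “no queued buffers, no deep copy” in the text.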

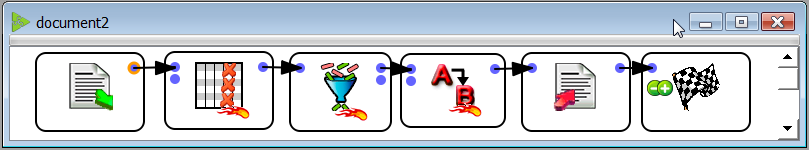

4. Description: This last approach combines the best of the two previous approaches: it allows multithreaded execution (where you control exactly how many CPUs are used) and mono-threaded execution (so that each CPU runs at peak efficiency). Anatella uses this approach. Inside Anatella, you have:

▪ All the Actions inside the same Multithreaded section run on 1 CPU (i.e. we have mono-threaded execution).

▪ We also have multithreaded execution, because each Multithreaded section (defined using the Multithread Action) runs on a different CPU.

Advantages: All advantages of the two previous approaches.

Disadvantages: This approach does not directly use all the CPUs available on your computer. To use all the available CPUs, you must manually add some Multithread Actions (or run several different transformation graphs “in parallel”, using the ParallelRun Action). Usually, the real speed bottleneck in Anatella is the CPU (all the disk I/O operations are very efficient inside Anatella). To remove this bottleneck, carefully add some Multithread Actions. Used properly, Multithread Actions can very often multiply the speed of the data-transformation process by 8 on simple commodity hardware.

Example of ETL tool using this approach: Anatella (and no other!)
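The combined approach can be pictured with the following sketch. It is not Anatella's implementation: the names (`run_section`, `from_queue`, `run_hybrid`) are hypothetical, and the split into two sections is an assumption made for the example. Each section is a single-threaded “pull” chain, and a queue exists only at the boundary between two sections, so the number of threads and buffers equals the number of sections rather than the number of boxes. Note that in standard CPython the global interpreter lock prevents these threads from executing Python code truly in parallel, so the sketch shows the structure only, not the speed-up.

```python
import queue
import threading

_DONE = object()  # sentinel marking the end of the row stream


def from_queue(q):
    """Source box of a section: pulls rows out of the queue that feeds it."""
    while True:
        row = q.get()
        if row is _DONE:
            return
        yield row


def run_section(pipeline, outbox):
    """Run one single-threaded pull chain and push its rows to the next section."""
    for row in pipeline:
        outbox.put(row)
    outbox.put(_DONE)


def parse(upstream):
    for line in upstream:
        name, age = line.split(",")
        yield {"name": name, "age": int(age)}


def keep_adults(upstream):
    for row in upstream:
        if row["age"] >= 18:
            yield row


def upper_name(upstream):
    for row in upstream:
        row["name"] = row["name"].upper()
        yield row


def run_hybrid(lines, buffer_rows=256):
    """Three boxes, two sections, two threads, one queue."""
    link = queue.Queue(maxsize=buffer_rows)  # the only buffer: one per section boundary
    section1 = threading.Thread(target=run_section, args=(parse(iter(lines)), link))
    section1.start()
    # Section 2 runs in the calling thread as an ordinary pull chain.
    results = list(upper_name(keep_adults(from_queue(link))))
    section1.join()
    return results
```

Adding a section boundary corresponds to the role the text gives to a Multithread Action: it is the one place where a thread and a buffer are introduced, and everything between two boundaries stays mono-threaded.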

Anatella is one of the very few modern “ETL tools” developed in C. Recent C compilers (like the excellent Microsoft Visual Studio) are astonishing: they produce executables that usually run two orders of magnitude faster than code written in any other programming language (except maybe Fortran). This means that all the transformation scripts based on the C/C++ operators available in Anatella (the ones marked with the flame icon) are unmatched in terms of execution speed. The execution speed of the operators based on the “JavaScripts language” (similar to JavaScript) is also extremely high, thanks to the use of a highly tuned JIT (just-in-time) compiler named “SquirrelFish Extreme”. Unfortunately, the operators based on the “JavaScripts language” are still much slower than the C operators (but research in the field of “JavaScript compilers” is still very active and an improved version of the “SquirrelFish Extreme” JIT might appear very soon).

We have invested a great amount of time in designing and developing one of the most flexible and fastest data transformation tools available on the market. Indeed, the processing speed reached by Anatella on simple commodity hardware is impressive. Thanks to a unique software design, Anatella is able to flawlessly process extremely large tables containing thousands of columns in a few seconds. This ability is vital when working on analytical tasks, such as predictive data mining.