|

<< Click to Display Table of Contents >> Navigation: 5. Detailed description of the Actions > 5.9. Text Mining > 5.9.4. Bag of Word (High-Speed

|

Icon: ![]()

Function: BagOfWord

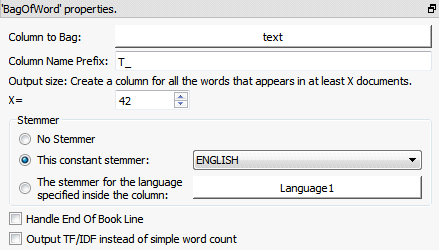

Property window:

Short description:

Bag-Of-Word: Structure unformatted text fields.

Long Description:

The objective is to extract from the text field a table that can be used inside TIMi to create a predictive model.

Typical usage scenariis include building models for:

•Churn/Cross-Selling/Up-Selling modeling: We'll complement the customer profiles by adding some fields (usually around 10.000 new fields) coming from text analytics. The main objective here is to increase the quality of the predictive models (to get more ROI out of it). For example, we can easily build high-accuracy Predictive Models that looks at a text database containing the calls made to your "hot line". If these texts contain words like "disconnections", "noisy", "unreachable", etc. it can be a good indicator of churn (for a telecom operator).

•Document classification: We'll automatically assign tags to documents.

To be able to create predictive models based on text, classical analytical tools are forcing you to define "Dictionnaries". These "Dictionnaries" contain a limited list of words pertaining to the field of interest. Each of these word/concept directly translates to a new variable added to the analytical dataset used for modeling (i.e. to the dataset injected into TIMi). Typically, you can't have more than a few hundreds different words or concept because classical modeling tools are limited in the number of variables that they can handle.

In opposition, TIMi supports a practically unlimited number of variables. This allows us to use a radically different approach to text mining. Instead of limiting the construction of the analytical dataset to a few hundred words/concepts, we'll create an analytical dataset that contains ALL the words/concepts visible inside the text corpus to analyze (and even more, if desired)(To be more precise, we are using the Bag-of-Word principle on the set of all stemmed words of the text corpus. Our stemmer supports a wide variety of languages). This approach has several advantages over the classical approach (used in classical tools such as SAS, SPSS, etc):

•We don't need to create "Dictionnaries" because TIMi will automatically select for us the optimal set of words and concepts that are the best to predict the given target. Since we are using an optimal selection, rather than a hand-made approximative selection, the preditive models delivered by TIMi are more accurate (and thus generates more ROI).

•We gain a large amount of man-hours because it's not required anymore to create "Dictionnaries". "Dictionnaries" are context-sensitive: For each different tasks/predictive target, you need to re-create a new dictionnary (i.e. you need to select manually the columns included inside the analytical dataset rather than let TIMi automatically select them for you). Creating new Dictionnaries is a lengthy boring task, easily prone to errors.

•The process is less expensive because you don’t need to “buy” these dectionnaries from an external provider: In paticular, SPSS has a big focus on dictionnaries: You can buy pre-made dictionnaries and some SPSS tools are focusing only on the creation of dictionnaries.